A few months ago we launched Adeptiq, an applicant tracking system with AI features for CV parsing and candidate search. In this post I want to share the technical choices we made and why.

The short version: we built Adeptiq on a stack that is cloud-native, largely self-hosted, and European. For a product handling sensitive candidate data, the choice was an easy one: GDPR compliance, data sovereignty, and security aren’t afterthoughts when you’re processing people’s CVs.

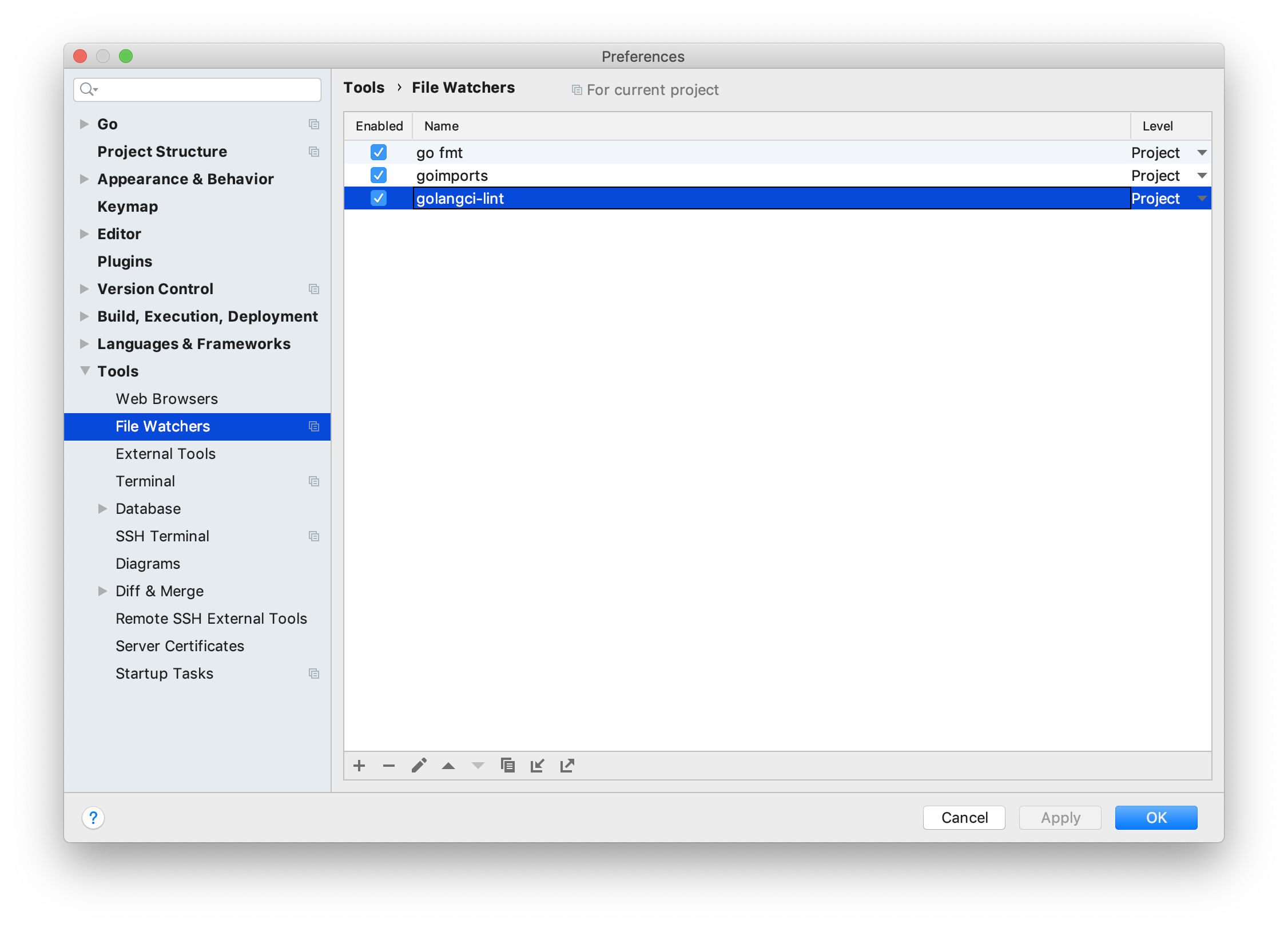

The stack at a glance

- Backend: Go + PostgreSQL + Echo

- Frontend: Vue.js

- Auth: Zitadel (self-hosted)

- AI: Mistral

- Infrastructure: Containers on Scaleway

- CI/CD: Forgejo (self-hosted)

Backend: Go + Postgres + Echo

Go was a no-brainer for me. I’m a freelance Go developer and have been writing Go professionally for years; it’s my go-to language for backend services. The reasons are well-known: fast compile times, easy deployment (a single binary), first-class concurrency with goroutines and channels, and a standard library that covers most needs.

For the web framework we use Echo. It’s lightweight, fast, and stays out of your way. Nothing fancy — just a solid foundation for a REST API.

PostgreSQL is the database. Boring choice, but boring is good. It handles our relational data well, and the jsonb type is useful for storing semi-structured candidate data.
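Here is a minimal Go sketch of the jsonb idea. The `candidates` table, its `parsed_cv` column, and the `ParsedCV` shape are hypothetical, not Adeptiq’s actual schema. Stable fields get ordinary columns. The variable output of CV parsing is serialised into a single jsonb column, and the containment operator `@>` queries inside it. The SQL appears only as constants, so the sketch runs without a database.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Experience is one entry in a candidate's work history.
// The shape is hypothetical, chosen for illustration only.
type Experience struct {
	Company string `json:"company"`
	Title   string `json:"title"`
	Years   int    `json:"years,omitempty"`
}

// ParsedCV is the semi-structured output of CV parsing (hypothetical shape).
// Which fields are present varies from CV to CV, which is what makes jsonb a good fit.
type ParsedCV struct {
	Skills     []string     `json:"skills"`
	Languages  []string     `json:"languages,omitempty"`
	Experience []Experience `json:"experience"`
}

// Hypothetical schema: stable relational fields get their own columns,
// the parsed CV lives in a single jsonb column named parsed_cv.
const insertCandidateSQL = `INSERT INTO candidates (name, email, parsed_cv) VALUES ($1, $2, $3::jsonb)`

// The jsonb containment operator @> matches rows whose parsed_cv contains the
// given JSON fragment; a GIN index on parsed_cv can serve this query.
const findBySkillSQL = `SELECT name, email FROM candidates WHERE parsed_cv @> $1::jsonb`

// encodeCV serialises a parsed CV into the JSON text bound to $3 above.
func encodeCV(cv ParsedCV) (string, error) {
	b, err := json.Marshal(cv)
	return string(b), err
}

// skillFilter builds the JSON fragment bound to $1 in findBySkillSQL,
// e.g. {"skills":["Go"]} matches every CV whose skills array contains "Go".
func skillFilter(skill string) (string, error) {
	b, err := json.Marshal(map[string][]string{"skills": {skill}})
	return string(b), err
}

func main() {
	cv := ParsedCV{
		Skills:     []string{"Go", "PostgreSQL"},
		Experience: []Experience{{Company: "Acme", Title: "Backend Developer", Years: 3}},
	}
	doc, err := encodeCV(cv)
	if err != nil {
		panic(err)
	}
	filter, err := skillFilter("Go")
	if err != nil {
		panic(err)
	}
	fmt.Println(insertCandidateSQL, doc)
	fmt.Println(findBySkillSQL, filter)
}
```

A GIN index on the column (for example `CREATE INDEX ON candidates USING GIN (parsed_cv jsonb_path_ops);`) lets PostgreSQL answer `@>` queries without scanning every row.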

Frontend: Vue.js

The frontend is a Vue.js single-page application. Vue is pleasant to work with and has a gentle learning curve. Not much else to say here — it does the job.

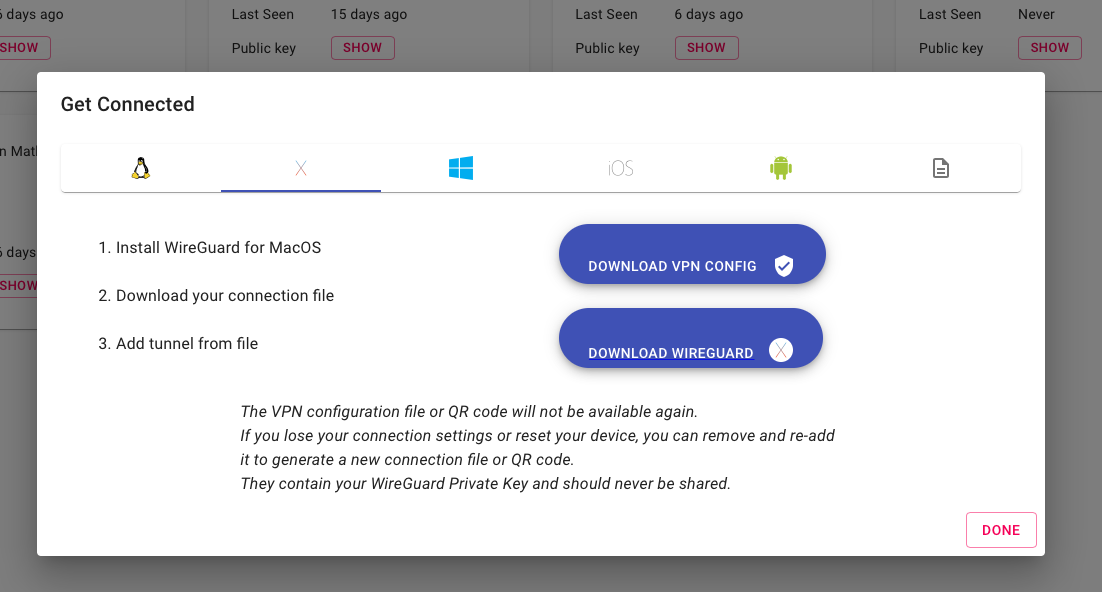

Authentication: self-hosted Zitadel

For authentication we use Zitadel, self-hosted. It’s a full OIDC provider that handles user management, login flows, and all the security features you don’t want to build yourself.

Why Zitadel over Auth0 or Clerk? A few reasons:

- Security out of the box: Zitadel gives us SSO and MFA without us having to implement them. For a product that stores sensitive candidate data, we wanted to lean on a battle-tested auth solution rather than roll our own.

- Self-hosted: We control the data. No vendor lock-in, no usage-based pricing surprises.

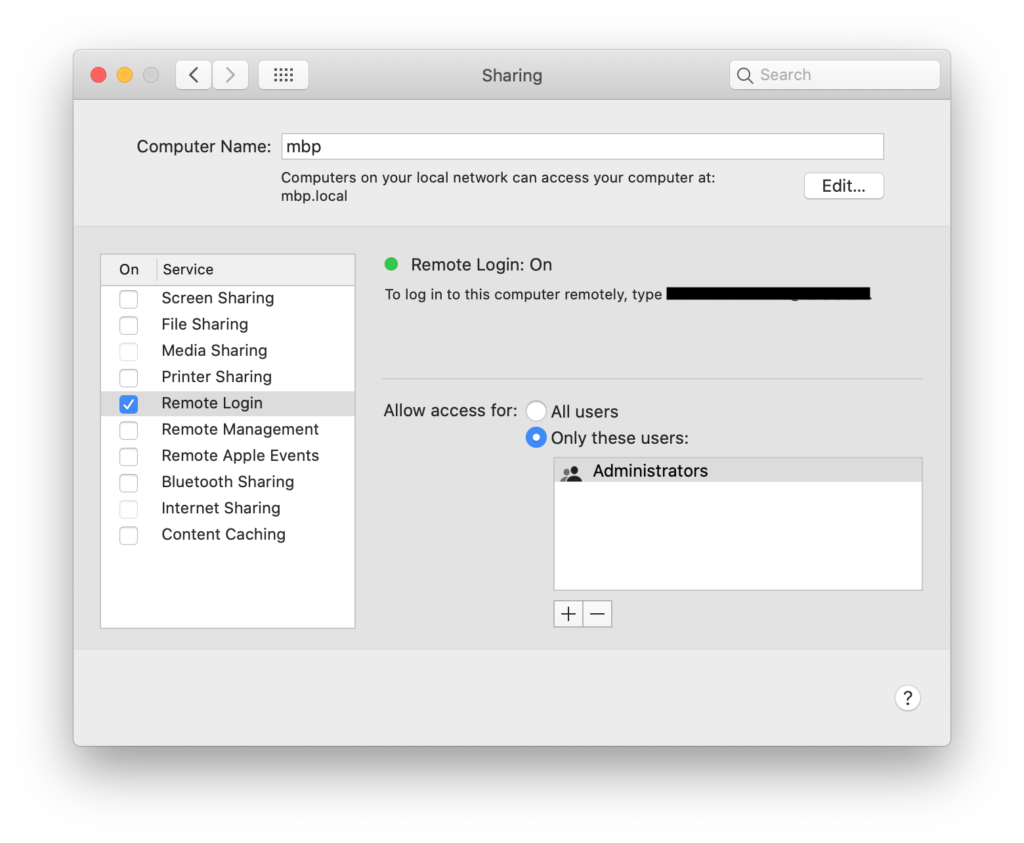

- Cloud-native: It’s designed to run in containers. Installation was straightforward.

- Lightweight: It’s written in Go and doesn’t need a lot of resources.

The tradeoff is that you’re responsible for running it. But if you’re already comfortable with containers and infrastructure, it’s not a big burden.

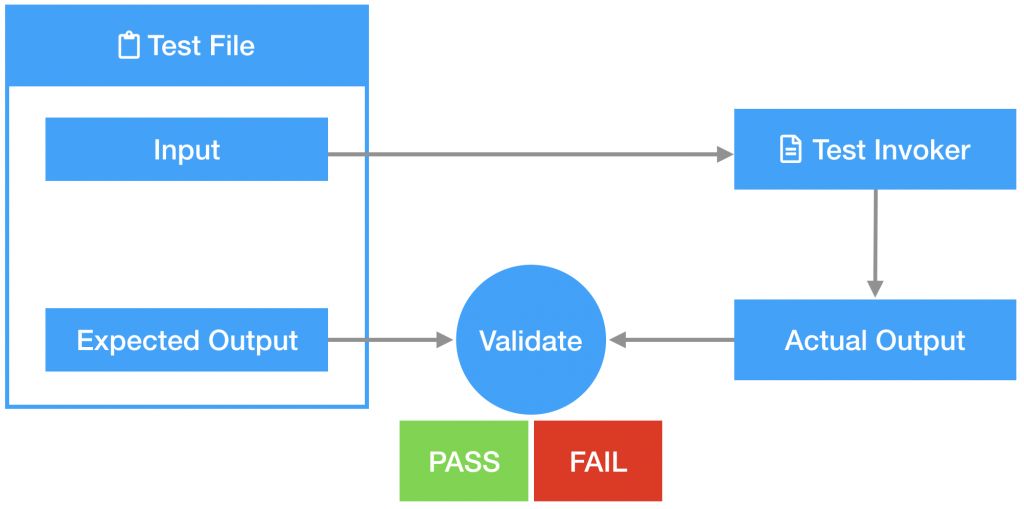

AI: Mistral for the smart bits

Adeptiq has two AI-powered features: extracting structured data from CVs, and searching candidates using natural language queries. For both we use Mistral’s completion models.

One thing worth noting: we do text extraction and OCR ourselves before sending anything to the LLM. PDFs are parsed and images are processed on our own infrastructure. This keeps token usage down and means we’re not paying for the LLM to read badly scanned documents.

Why Mistral over OpenAI? This one was easy:

- European data residency: CVs contain personal data — names, addresses, phone numbers, work history. Under GDPR we have a responsibility to handle that data carefully. By using Mistral, we keep that data within Europe. No transatlantic transfers.

- Performance: For our use case — structured extraction and semantic search — Mistral performs very well.

- Pricing: Competitive and predictable.

When solid European alternatives exist, why look elsewhere?

Infrastructure: containers on Scaleway

Everything runs in containers on Scaleway. We’re not using Kubernetes yet — just containers on managed instances, kept simple. Kubernetes will come once we need to scale further, but for now there’s no reason to add that complexity.

Scaleway is a French cloud provider. The pricing is transparent, the UI is clean, and European data residency is built-in. For a B2B SaaS handling candidate data under GDPR, knowing exactly where your data lives isn’t optional — it’s essential.

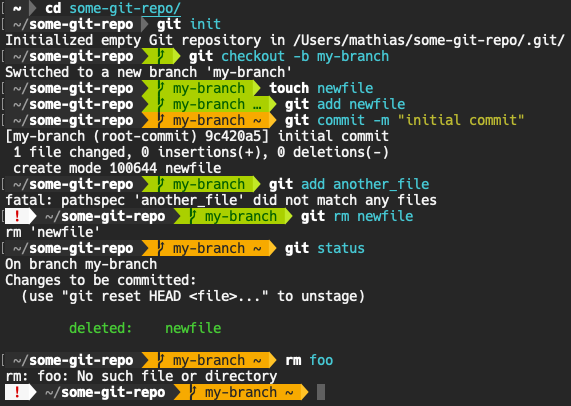

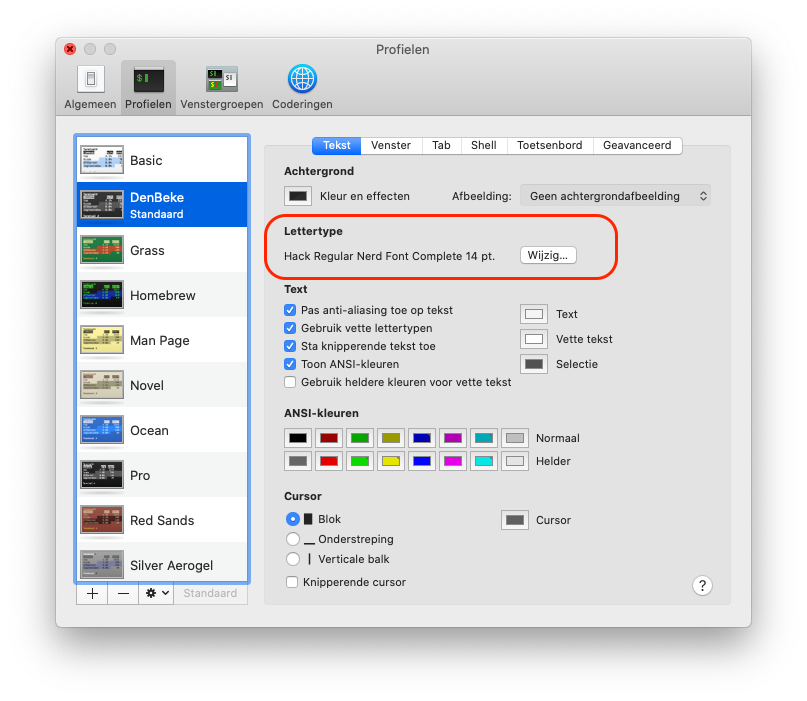

CI/CD: self-hosted Forgejo

For CI/CD we use Forgejo, self-hosted. Forgejo is a fork of Gitea, itself a lightweight Git hosting solution. It ships with a built-in CI/CD system, Forgejo Actions, whose runners work well for our needs.

Why not GitHub Actions or GitLab? We already had Forgejo running for code hosting, and its built-in CI covers our use case. One less external dependency, one less place where our code leaves our infrastructure.

Wrapping up

Here’s the full picture:

| Layer | What we use | The “default” alternative |
|---|---|---|
| Cloud | Scaleway | AWS, GCP |
| Auth | Zitadel (self-hosted) | Auth0, Clerk |
| LLM | Mistral | OpenAI |
| CI/CD | Forgejo (self-hosted) | GitHub Actions |

Building on European infrastructure isn’t a compromise; it’s a competitive advantage. We get solid tools, a far simpler path to GDPR compliance, and full control over where our users’ data lives. The tradeoff is more operational responsibility, but for a small team that knows its way around infrastructure, that’s a fair trade for independence.

If you’re curious about Adeptiq itself, check it out at adeptiq.be.